Algorithm of Thoughts Prompting

Prompt engineering series on techniques eliciting reasoning capabilities of LLMs

Original Paper: Algorithm of Thoughts: Enhancing Exploration of Ideas in Large Language Models

Abstract

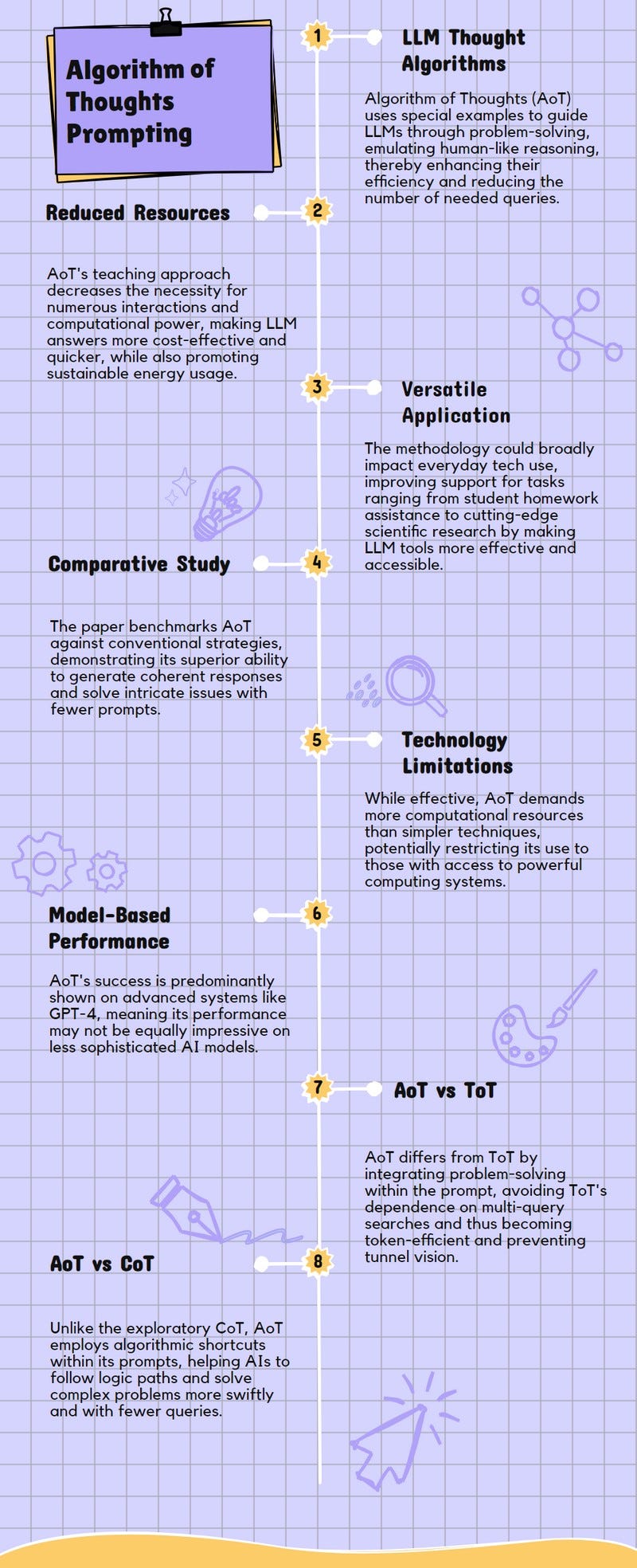

This paper introduces Algorithm of Thoughts (AoT), a prompting strategy that uses algorithmic in-context examples to help large language models (LLMs) explore ideas and reason through problems, aiming to improve results with only one or a few queries.

It shows that instructing an LLM with an algorithm can lead to performance that surpasses the algorithm itself, which suggests the model adds its own heuristics to the search.

Practical Implications

Compared with multi-query methods, this paper's approach significantly reduces the number of queries that must be sent to an LLM to solve a problem, making it cheaper and faster to get answers while using less compute and energy.

By prompting models to work through problems step by step, with examples that include exploration and backtracking, they can solve complex tasks more effectively, potentially even better than the algorithms the examples were built from.

The method could make LLM-based tools more capable in everyday applications, from helping students with homework to assisting researchers with advanced scientific questions, by making these tools more accessible and efficient.

Fewer queries could also help lower the energy consumption of the data centers that serve these models, contributing to more sustainable technology use.

Methodology

The paper introduces the "Algorithm of Thoughts" method, which guides large language models through problem-solving using algorithmic reasoning, reducing the need for multiple queries and computational resources by leveraging examples that mimic algorithmic thinking.

It compares this method against standard prompting, Chain-of-Thought (CoT), and Tree of Thoughts (ToT), using puzzle-style tasks such as the Game of 24 and mini crosswords to demonstrate that it can solve complex problems with fewer queries.

By employing algorithmic examples within the model's prompts, this approach enables the model to explore ideas and solutions more efficiently, potentially surpassing the performance of the algorithms themselves, indicating a significant advancement in model reasoning capabilities.
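To make the idea of an "algorithmic example" concrete, here is a minimal sketch, not the paper's actual prompt. It assumes the Game of 24 as the task, runs a plain depth-first search on one puzzle, records every step including dead ends, and pastes that trace into the prompt as the in-context example. The names `dfs_trace`, `build_aot_prompt`, and `fmt` are hypothetical, and the exhaustive trace is a simplification: a practical prompt would use a much shorter, hand-curated trace.

```python
from fractions import Fraction
from itertools import combinations

# Exact fractions avoid float rounding errors on division.
OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
    "/": lambda a, b: a / b if b != 0 else None,
}

def fmt(state):
    """Render a list of numbers as space-separated text."""
    return " ".join(str(n) for n in state)

def dfs_trace(numbers, target=24, trace=None, depth=0):
    """Depth-first search that logs every step tried, including dead ends."""
    if trace is None:
        trace = []
    indent = "  " * depth
    if len(numbers) == 1:
        solved = numbers[0] == target
        trace.append(indent + (f"-> reached {target}, done" if solved
                               else "-> dead end, backtrack"))
        return solved, trace
    for i, j in combinations(range(len(numbers)), 2):
        rest = [n for k, n in enumerate(numbers) if k not in (i, j)]
        # Try both operand orders, since - and / are not commutative.
        for a, b in ((numbers[i], numbers[j]), (numbers[j], numbers[i])):
            for symbol, op in OPS.items():
                value = op(a, b)
                if value is None:  # skip division by zero
                    continue
                state = rest + [value]
                trace.append(f"{indent}{a} {symbol} {b} = {value} (left: {fmt(state)})")
                solved, _ = dfs_trace(state, target, trace, depth + 1)
                if solved:
                    return True, trace
    return False, trace

def build_aot_prompt(example_numbers, new_numbers, target=24):
    """Single prompt: instruction, one search trace as the example, then the new puzzle."""
    solved, trace = dfs_trace([Fraction(n) for n in example_numbers], target)
    if not solved:
        raise ValueError("pick an example puzzle that has a solution")
    return (
        f"Use each number exactly once with + - * / to reach {target}. "
        "Explore candidate steps in order and backtrack from dead ends.\n\n"
        f"Input: {fmt(example_numbers)}\n"
        + "\n".join(trace)
        + f"\n\nInput: {fmt(new_numbers)}\n"
    )

if __name__ == "__main__":
    print(build_aot_prompt([1, 2, 3, 4], [4, 9, 10, 13]))
```

The resulting string would be sent to the model in one query. The model is expected to continue the same explore-and-backtrack pattern for the new input.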

Limitations

The Algorithm of Thoughts (AoT) method requires more resources than standard prompting and Chain-of-Thought due to its extensive exploration of ideas through token generation, making it more demanding in terms of computational power and memory usage.

Although AoT reduces the number of queries compared to Tree of Thoughts (ToT), it still demands significant computational resources, which might not be feasible for all users or applications, especially those with limited access to advanced computing facilities.

The effectiveness of AoT is primarily demonstrated on GPT-4, suggesting that its performance might not be as impressive on less advanced models, which could limit its applicability across different platforms and technologies.

Conclusion

The Algorithm of Thoughts (AoT) method significantly reduces the number of queries needed to solve a problem, making it more efficient than multi-query methods like Tree of Thoughts while maintaining high performance. It achieves this at the cost of generating more tokens per query than standard prompting or Chain-of-Thought.

AoT has shown the potential to outperform the algorithms it is based on, suggesting that large language models can integrate their own 'intuition' to optimize problem-solving beyond the original algorithmic instructions.

Despite its advantages, AoT still demands more computational resources than simpler methods, and its optimal performance is primarily observed with advanced models like GPT-4, indicating a limitation in accessibility for users with less powerful technology.

How is Algorithm of Thoughts prompting different from Tree of Thoughts prompting?

Algorithm of Thoughts (AoT) prompting guides large language models through algorithmic reasoning pathways, allowing them to explore ideas with fewer queries. Tree of Thoughts (ToT), by contrast, relies on an external, multi-query tree search algorithm to enhance reasoning capabilities.

AoT capitalizes on the model's ability to follow algorithmic examples within a single prompt or a small number of prompts, thereby reducing the computational and memory overhead associated with ToT's extensive query requirements.

While ToT performs an extensive external search to find solutions, AoT integrates the search process within the context, making it more efficient by leveraging the model's inherent capabilities to generate and evaluate potential solutions in a streamlined manner.
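The difference in query counts can be illustrated with a small sketch. Everything here is an assumption made for illustration: `CountingLLM` is a stub that only counts calls, and the `depth`, `breadth`, and `proposals` values are arbitrary. Real ToT implementations batch proposals and evaluations differently, so the exact numbers will vary.

```python
class CountingLLM:
    """Stand-in for a model API. It only counts how often it is queried."""

    def __init__(self):
        self.calls = 0

    def complete(self, prompt: str) -> str:
        self.calls += 1
        return f"response to: {prompt[:30]}"


def external_tree_search(llm, problem, depth=3, breadth=5, proposals=3):
    """ToT-style loop: the search controller lives outside the model."""
    frontier = [problem]
    for _ in range(depth):
        candidates = []
        for state in frontier:
            for k in range(proposals):
                # One query per proposed next step.
                candidates.append(llm.complete(f"Propose step {k} for: {state}"))
        for candidate in candidates:
            # One query per evaluation of a candidate.
            llm.complete(f"Evaluate: {candidate}")
        # The stub returns no real scores, so simply keep the first `breadth`.
        frontier = candidates[:breadth]
    return frontier


def in_context_search(llm, problem, algorithmic_example):
    """AoT-style: the whole search is written out inside one generation."""
    return llm.complete(f"{algorithmic_example}\n\nProblem: {problem}\n")


if __name__ == "__main__":
    tot_llm, aot_llm = CountingLLM(), CountingLLM()
    external_tree_search(tot_llm, "4 9 10 13")
    in_context_search(aot_llm, "4 9 10 13", "<search trace example>")
    print("external search queries:", tot_llm.calls)
    print("in-context search queries:", aot_llm.calls)
```

With these arbitrary settings the external loop issues 54 queries, while the in-context version issues one. The single query is longer, though, because the model has to generate the entire search as text.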

AoT's approach is designed to be more token-efficient and aims to keep the model from getting stuck in 'tunnel vision' during the search, which distinguishes it from ToT's reliance on a broader, more computationally intensive external search.

How is Algorithm of Thoughts prompting different from Chain-of-Thought prompting?

Algorithm of Thoughts (AoT) prompting guides large language models through specific reasoning paths using algorithmic examples, so the model can explore alternatives and backtrack within a single query. Chain-of-Thought (CoT) prompting lays out one step-by-step reasoning chain without specifically designed pathways, so complex problems often require sampling additional chains.

AoT is designed to leverage the model's inherent ability to follow algorithmic logic, potentially enhancing its problem-solving capabilities beyond the original algorithm, whereas CoT relies on the model to work through a problem sequentially, as a human might, without the structure an algorithmic example provides.

AoT aims to keep computational and memory overhead low by handling the whole search in one query. A single CoT query generates fewer tokens than AoT, but its linear chain cannot revisit an earlier step, and compensating by sampling many chains (as in self-consistency) multiplies the number of queries.
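The contrast is easiest to see in the in-context example itself. The two demonstrations below are hand-written for illustration and are not taken from the paper. Both solve the same Game of 24 puzzle, but the CoT version shows only the successful chain, while the AoT version also shows failed branches and backtracking. `build_prompt` is a hypothetical helper.

```python
INSTRUCTION = "Use each number exactly once with + - * / to reach 24.\n\n"

# CoT-style demonstration: one linear chain, no exploration.
COT_DEMO = (
    "Input: 4 4 6 8\n"
    "4 + 8 = 12 (left: 4 6 12)\n"
    "6 - 4 = 2 (left: 2 12)\n"
    "12 * 2 = 24\n"
    "Answer: (4 + 8) * (6 - 4) = 24\n"
)

# AoT-style demonstration: the same puzzle, with explored and abandoned branches.
AOT_DEMO = (
    "Input: 4 4 6 8\n"
    "Try 4 + 4 = 8 (left: 6 8 8)\n"
    "  8 - 6 = 2 (left: 2 8): 2 + 8 = 10, 2 * 8 = 16, 8 / 2 = 4, no 24, backtrack\n"
    "  8 + 8 = 16 (left: 6 16): 6 + 16 = 22, 16 - 6 = 10, 6 * 16 = 96, no 24, backtrack\n"
    "Try 4 + 8 = 12 (left: 4 6 12)\n"
    "  6 - 4 = 2 (left: 2 12): 12 * 2 = 24, found\n"
    "Answer: (4 + 8) * (6 - 4) = 24\n"
)


def build_prompt(demo, numbers):
    """Combine the instruction, one demonstration, and the new puzzle."""
    return INSTRUCTION + demo + "\nInput: " + " ".join(map(str, numbers)) + "\n"


if __name__ == "__main__":
    print(build_prompt(COT_DEMO, [4, 9, 10, 13]))
    print(build_prompt(AOT_DEMO, [4, 9, 10, 13]))
```

Either prompt is sent as a single query. The AoT demonstration is longer, which reflects the extra token cost noted under Limitations.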

Paper Infographic