Chain-of-Knowledge: Grounding Large Language Models via Dynamic Knowledge Adapting Over Heterogeneous Sources

Prompt engineering series on techniques eliciting reasoning capabilities of LLMs

This is a non-technical explanation of the original paper, "Chain-of-Knowledge: Grounding Large Language Models via Dynamic Knowledge Adapting Over Heterogeneous Sources".

Abstract:

This paper introduces a new system called Chain-of-Knowledge (CoK) that makes LLMs better at giving answers based on real facts by using a wide range of information sources.

CoK works by first guessing answers to questions, then checking and improving these guesses with information from different places like websites and databases, to make sure the final answers are accurate and trustworthy.

Practical Implications:

The Chain-of-Knowledge (CoK) framework significantly improves the accuracy of large language models by grounding their responses in verified information, reducing the chances of generating incorrect or made-up answers.

By incorporating both structured and unstructured data sources, CoK ensures that the information used to answer questions is more reliable, addressing critical issues like misinformation and data reliability.

CoK's adaptive approach to querying different types of knowledge sources makes it versatile and applicable across various domains, potentially enhancing the quality of automated responses in fields ranging from medicine to physics.

The methodology behind CoK, which involves correcting and refining answers through progressive knowledge adaptation, could lead to advancements in how AI systems learn and improve over time, making them more effective tools for researchers and professionals.

Methodology:

The Chain-of-Knowledge (CoK) framework enhances large language models by dynamically incorporating information from a variety of sources, aiming to provide more accurate and fact-based answers by going through stages of reasoning preparation, dynamic knowledge adapting, and answer consolidation.

CoK uses an adaptive query generator to create queries suitable for different types of data sources, including structured databases and unstructured text, allowing it to fetch relevant information across various knowledge domains.
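The idea of matching the query format to the source type can be illustrated with a small sketch. This is not the paper's generator, which is a model; the `Query` type, the `generate_query` function, the `facts(entity, relation, value)` table layout, and the fixed templates are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Query:
    text: str                      # query text in the source's own format
    params: Tuple[str, ...] = ()   # bound parameters (used by the SQL form only)


def generate_query(entity: str, relation: str, source_kind: str) -> Query:
    """Hypothetical stand-in for an adaptive query generator.

    A real generator would be a model; fixed templates are used here only to
    show that the query format follows the type of the knowledge source.
    """
    if source_kind == "structured":
        # Assumed table layout: facts(entity, relation, value).
        sql = "SELECT value FROM facts WHERE entity = ? AND relation = ?"
        return Query(sql, (entity, relation))
    if source_kind == "unstructured":
        # Free-text sources are searched with a natural-language question.
        return Query(f"What is the {relation} of {entity}?")
    raise ValueError(f"unknown source kind: {source_kind!r}")


if __name__ == "__main__":
    print(generate_query("aspirin", "drug class", "structured"))
    print(generate_query("aspirin", "drug class", "unstructured"))
```

The same entity and relation produce a parameterized SQL query for a database and a plain question for a text source, so the retrieval code behind each source can stay simple.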

To ensure the information used is reliable, CoK selects authoritative knowledge sources and employs a progressive rationale correction step, where each rationale is corrected and improved based on the newly adapted knowledge before moving on to the next.
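The progressive correction step described above might be sketched as a simple loop. This is a toy under stated assumptions: `FACTS`, `retrieve`, and `correct` are hypothetical placeholders for the knowledge source, the retrieval step, and the model-driven rewrite, and the keyword matching is far cruder than anything a real system would use.

```python
from typing import Callable, Dict, List

# Hypothetical knowledge source: a keyword mapped to a trusted statement.
FACTS: Dict[str, str] = {
    "Eiffel Tower": "The Eiffel Tower is in Paris.",
}


def retrieve(rationale: str, previous: List[str]) -> List[str]:
    """Toy retrieval: return facts whose keyword appears in the rationale.

    `previous` holds the already corrected rationales; a real retriever
    could use them as context, this toy version ignores them.
    """
    return [fact for key, fact in FACTS.items() if key in rationale]


def correct(rationale: str, evidence: List[str]) -> str:
    """Toy correction: replace the rationale with the first retrieved fact."""
    return evidence[0] if evidence else rationale


def progressive_correction(
    rationales: List[str],
    retrieve_fn: Callable[[str, List[str]], List[str]] = retrieve,
    correct_fn: Callable[[str, List[str]], str] = correct,
) -> List[str]:
    corrected: List[str] = []
    for rationale in rationales:
        # Each rationale is fixed before the loop moves on to the next one,
        # so later steps can build on already corrected earlier steps.
        evidence = retrieve_fn(rationale, corrected)
        corrected.append(correct_fn(rationale, evidence))
    return corrected


if __name__ == "__main__":
    draft = [
        "The Eiffel Tower is in Berlin.",
        "So the answer is a European city.",
    ]
    for line in progressive_correction(draft):
        print(line)
```

Processing the rationales in order, rather than all at once, is what keeps an early mistake from carrying through to the steps that follow it.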

The framework is tested across multiple knowledge-intensive tasks, demonstrating its ability to significantly reduce errors and improve the factual accuracy of the generated content compared to existing methods.

Limitations:

The Chain-of-Knowledge (CoK) framework's accuracy depends on the reliability of external knowledge sources, meaning if these sources contain incorrect information, CoK might also generate inaccurate answers.

There's a possibility of conflicts between different knowledge sources, which could lead to challenges in determining the most accurate information to use in the CoK framework.

The effectiveness of CoK is partly limited by the knowledge retrieval step; if it fails to fetch relevant facts, the rationales have nothing to be corrected against, and the output may not be useful.

CoK's performance is also tied to the reasoning capabilities of large language models; if these models fail in their reasoning tasks, CoK might not be able to correct these errors effectively.

Conclusion:

The Chain-of-Knowledge (CoK) framework significantly improves the accuracy of large language models by using a variety of information sources, making it a promising solution for generating knowledge-based answers.

CoK's adaptive query generator is a key innovation that supports both structured and unstructured queries, enhancing the model's ability to fetch relevant information from diverse sources.

In extensive testing, CoK has been shown to outperform existing methods on tasks requiring deep knowledge, demonstrating its effectiveness across different domains.

How is the Chain-of-Knowledge prompting technique different from other prompting techniques?

Chain-of-Knowledge (CoK) dynamically incorporates grounding information from heterogeneous sources, enhancing factual correctness and reducing hallucinations in generated content, unlike traditional prompting techniques that rely solely on the model's pre-existing knowledge.

CoK involves a three-stage process: reasoning preparation, dynamic knowledge adapting, and answer consolidation, which is a structured approach to systematically refine the model's outputs based on external knowledge sources, setting it apart from simpler, one-step prompting methods.

The framework uses an adaptive query generator to access both structured and unstructured knowledge sources, allowing for a more versatile and accurate retrieval of information compared to other techniques that may not dynamically adapt to different types of knowledge sources.

Chain-of-Knowledge Prompting Template

Start by identifying the main question or problem you need to solve. This helps the model understand what information is being sought.

Generate preliminary rationales or thoughts about the question. This step involves thinking about possible answers or explanations for the question.

Use the adaptive query generator to fetch relevant information from both structured and unstructured knowledge sources. This is crucial for gathering accurate data to support your answer.

Correct the initial rationales based on the new information obtained. This step ensures that any inaccuracies are addressed before finalizing the answer.

Consolidate the corrected rationales to form a comprehensive and accurate final answer. This last step combines all the refined information into a clear response to the original question.
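The steps above could be turned into concrete prompt text along the following lines. The wording of each prompt and the function names are assumptions for illustration, not the prompts used in the paper; retrieval itself is left out, and the retrieved evidence is simply passed in as a list of strings.

```python
from typing import List


def rationale_prompt(question: str) -> str:
    """Steps 1 and 2: state the question and ask for preliminary rationales."""
    return (
        f"Question: {question}\n"
        "Write your preliminary reasoning as short numbered steps."
    )


def correction_prompt(rationale: str, evidence: List[str]) -> str:
    """Step 4: ask for one rationale to be corrected against retrieved facts."""
    facts = "\n".join(f"- {e}" for e in evidence) or "- (nothing retrieved)"
    return (
        f"Rationale: {rationale}\n"
        f"Retrieved knowledge:\n{facts}\n"
        "Rewrite the rationale so that it agrees with the retrieved knowledge."
    )


def consolidation_prompt(question: str, corrected: List[str]) -> str:
    """Step 5: ask for a final answer built from the corrected rationales."""
    steps = "\n".join(f"{i}. {r}" for i, r in enumerate(corrected, start=1))
    return (
        f"Question: {question}\n"
        f"Corrected rationales:\n{steps}\n"
        "Using only these rationales, give the final answer."
    )


if __name__ == "__main__":
    question = "Where is the Eiffel Tower?"
    print(rationale_prompt(question))
    print(correction_prompt("The Eiffel Tower is in Berlin.",
                            ["The Eiffel Tower is in Paris."]))
    print(consolidation_prompt(question, ["The Eiffel Tower is in Paris."]))
```

Each function returns plain text, so the prompts can be sent to whichever model is in use, with step 3 (retrieval) supplying the `evidence` list between the first and second prompt.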

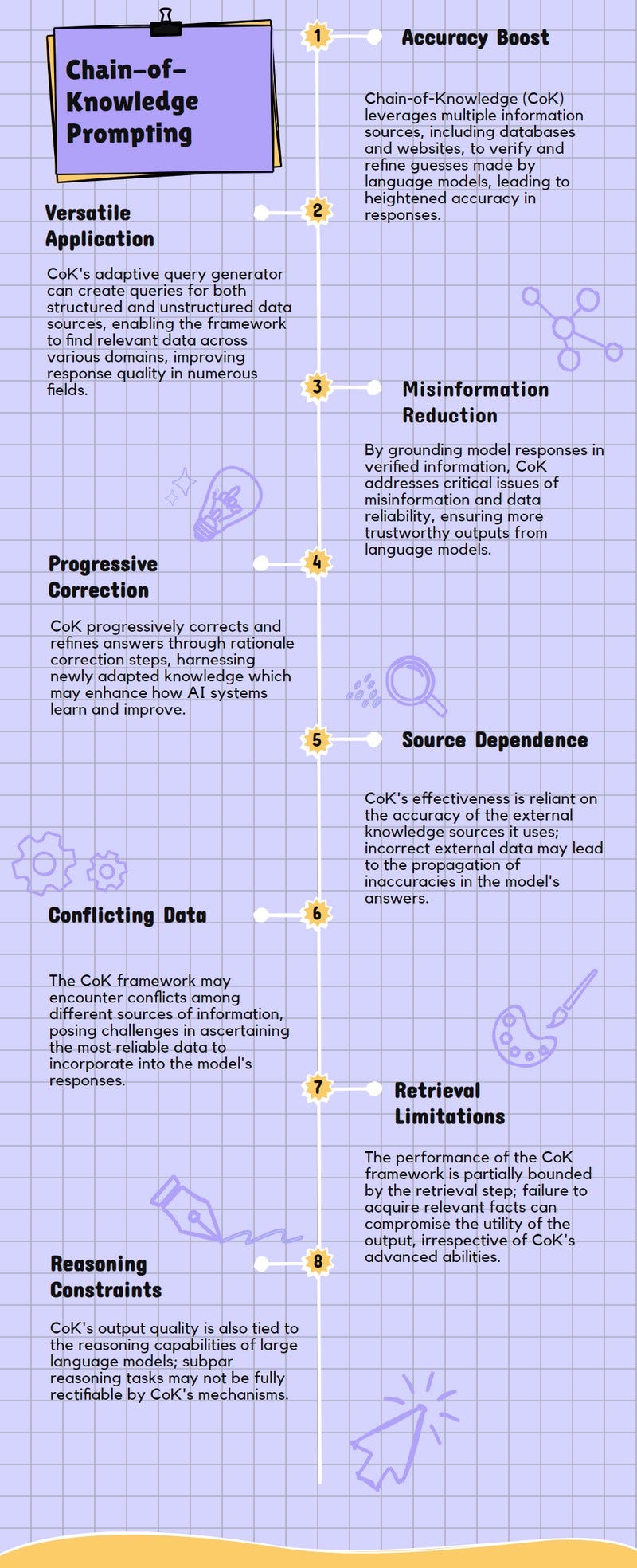

Paper Infographic