Chain-of-Symbol Prompting for Spatial Relationships in Large Language Models

Prompt engineering series on techniques for eliciting the reasoning capabilities of LLMs

This is a non-technical explanation of the original paper: Chain-of-Symbol Prompting for Spatial Relationships in Large Language Models.

Abstract:

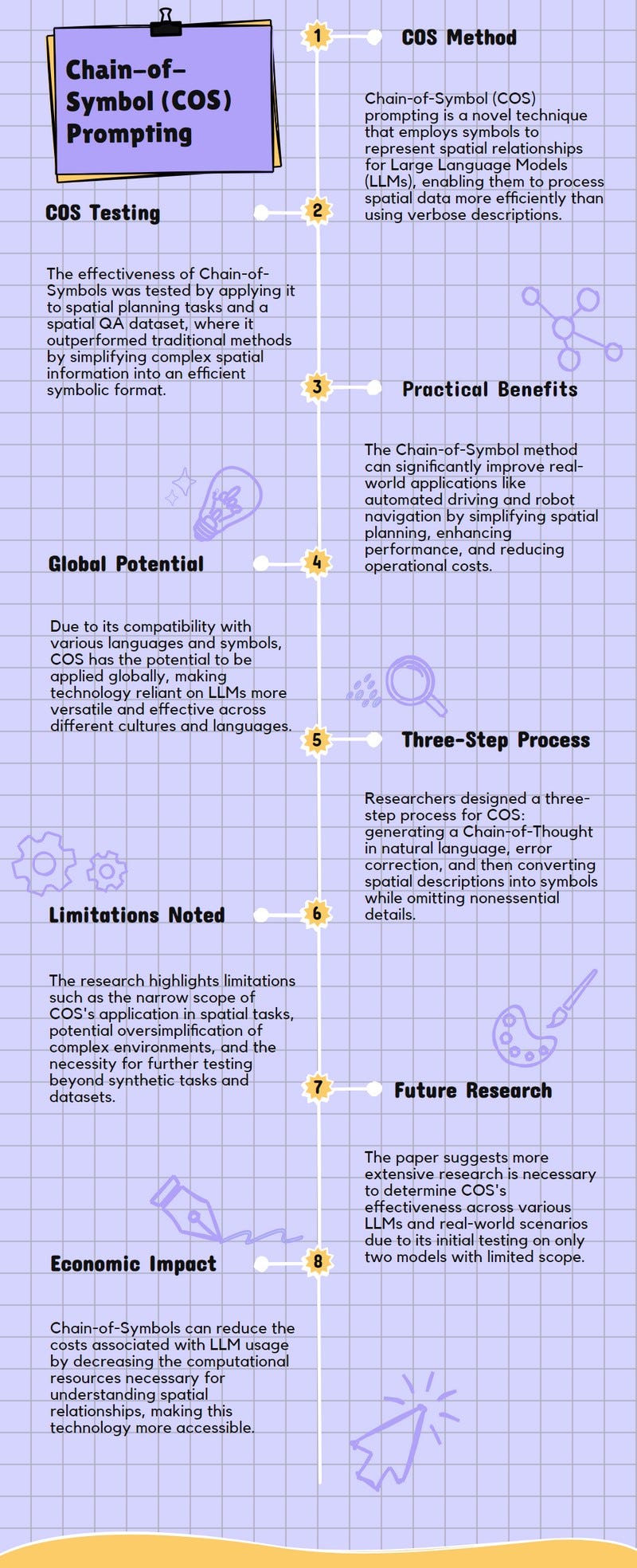

This paper explores how large language models (LLMs) understand spatial relationships and plan in spatial environments. It introduces a new prompting method called Chain-of-Symbol (CoS), which represents the relationships between objects with condensed symbols instead of lengthy natural-language sentences.

Testing the method on several tasks that require reasoning about how objects are arranged, the researchers found that Chain-of-Symbol prompting makes LLMs considerably better at solving these problems while using shorter intermediate reasoning steps, and therefore fewer tokens, than natural-language prompting.

Practical Implications:

Chain-of-Symbol prompting improves LLMs' spatial understanding and planning by replacing long descriptions with simple symbols, which could help with tasks such as navigating a robot through a room or planning a route on a map more efficiently.

Because Chain-of-Symbol prompts need fewer tokens to express the same spatial relationships, they can lower the cost of using LLMs, making the technology accessible to more people and projects.

The gains in accuracy and efficiency from Chain-of-Symbol prompting could benefit real-world applications such as automated driving systems, where understanding spatial relationships quickly and accurately is crucial.

Chain-of-Symbol’s robustness to different languages and choices of symbols suggests it could be applied widely, making LLM-based technology more versatile across languages and cultures.

Methodology:

The researchers introduced Chain-of-Symbol (CoS) prompting, a method that represents the spatial relationships between objects with condensed symbols, rather than natural-language descriptions, in the intermediate reasoning steps given to the LLM.
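To make the idea concrete, the following Python sketch turns pairwise "X is on top of Y" facts about a single stack of bricks into one condensed symbolic chain. The `/` symbol and the `stack_to_symbols` helper are hypothetical choices for illustration, not the notation or code used in the paper.

```python
# A minimal sketch, assuming a single stack and "/" as a hypothetical
# symbol meaning "is on top of". Output reads top to bottom.

def stack_to_symbols(on_top_of: list[tuple[str, str]]) -> str:
    """Build a top-to-bottom chain like "C/B/A" from (upper, lower) pairs."""
    below = dict(on_top_of)                 # upper brick -> brick under it
    tops = set(below) - set(below.values())  # bricks with nothing on top
    if len(tops) != 1:
        raise ValueError("expected exactly one stack with a single top brick")
    chain = [tops.pop()]
    # Walk downward until we reach a brick that rests on nothing listed.
    while chain[-1] in below:
        nxt = below[chain[-1]]
        if nxt in chain:
            raise ValueError("cyclic 'on top of' relations")
        chain.append(nxt)
    return "/".join(chain)


natural = "Brick C is on top of brick B, and brick B is on top of brick A."
facts = [("B", "A"), ("C", "B")]  # the same two facts as pairs
print(natural)
print(stack_to_symbols(facts))  # C/B/A
```

The sentence and the three-character chain carry the same spatial information; the chain is what a CoS-style reasoning step would keep.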

They built CoS demonstrations in three steps: first, generate a Chain-of-Thought (CoT) in natural language; second, correct any errors in it; third, replace the spatial descriptions with symbols and remove unnecessary details.
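The third step can be pictured as a set of rewrite rules. The Python sketch below assumes steps one and two are already done (an LLM produced the CoT and a person corrected it) and shows only the condensation step. The filler list, the symbol table, and `cot_to_cos` are hypothetical; the paper does not say the conversion was automated with regular expressions.

```python
import re

# Hypothetical filler phrases that carry no spatial information.
FILLER_PATTERNS = [
    r"let's think step by step\.\s*",
    r"we can see that\s*",
]

# Hypothetical mapping from verbose spatial phrases to compact symbols.
SYMBOL_RULES = [
    (r"\s+is on top of\s+", "/"),
    (r"\s+is to the left of\s+", "<"),
    (r"\s+is to the right of\s+", ">"),
]


def cot_to_cos(corrected_cot: str) -> str:
    """Condense an already-corrected natural-language CoT into symbolic steps."""
    text = corrected_cot
    # Remove filler that does not describe spatial relations.
    for pattern in FILLER_PATTERNS:
        text = re.sub(pattern, "", text, flags=re.IGNORECASE)
    # "brick C" -> "C": the object label alone is enough.
    text = re.sub(r"\b[Bb]rick\s+([A-Z])\b", r"\1", text)
    # Replace each spatial phrase with its symbol.
    for pattern, symbol in SYMBOL_RULES:
        text = re.sub(pattern, symbol, text)
    # Keep one short clause per sentence, joined by commas.
    steps = [s.strip() for s in re.split(r"\.\s*", text) if s.strip()]
    return ", ".join(steps)


cot = ("Let's think step by step. We can see that brick C is on top of brick B. "
       "Brick B is on top of brick A.")
print(cot_to_cos(cot))  # C/B, B/A
```

Rules like these only cover phrasings they anticipate, which is one reason the correction step comes before condensation.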

To evaluate CoS, they compared it with conventional CoT prompting on spatial planning tasks and on a spatial question-answering dataset, finding that CoS was both more accurate and more token-efficient.
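Efficiency here means fewer tokens in the intermediate steps. The sketch below compares a CoT step with a CoS counterpart, using whitespace-separated words as a crude stand-in for tokens. Real LLM tokenizers split symbols such as `C/B` into several tokens, so actual savings will differ. `approx_tokens` and `token_reduction` are hypothetical helpers.

```python
def approx_tokens(text: str) -> int:
    """Crude token proxy: whitespace-separated words (not a real LLM tokenizer)."""
    return len(text.split())


def token_reduction(cot: str, cos: str) -> float:
    """Percentage of approximate tokens saved by the CoS version."""
    before, after = approx_tokens(cot), approx_tokens(cos)
    if before == 0:
        raise ValueError("CoT text is empty")
    return 100.0 * (before - after) / before


cot_steps = "Brick C is on top of brick B. Brick B is on top of brick A."
cos_steps = "C/B, B/A"
print(approx_tokens(cot_steps), approx_tokens(cos_steps))  # 16 2
print(f"{token_reduction(cot_steps, cos_steps):.1f}% fewer tokens")  # 87.5% fewer tokens
```

For real measurements, count tokens with the tokenizer of the model being prompted.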

Limitations:

The paper focuses mainly on spatial understanding and planning tasks, which may not cover the full range of challenges LLMs face in complex spatial environments, so the demonstrated scope of the CoS method is narrow.

Although CoS substantially improves how LLMs handle spatial relationships, the paper does not examine in depth how well it transfers to LLMs other than those tested, leaving its general applicability an open question.

The method relies on symbolic representations which, although efficient, may oversimplify complex spatial scenarios and limit the model's ability to capture nuanced spatial relationships.

The effectiveness of CoS in real-world applications remains somewhat speculative, as the paper evaluates the method mainly on synthetic spatial planning tasks and a single spatial QA dataset, which may not reflect real-world complexity.

Conclusion:

The researchers found that current LLMs struggle with tasks that require understanding and planning in space. This led them to create CoS (Chain-of-Symbol) prompting, which helps these models reason about spatial relationships by using symbols instead of words.

The CoS method, which condenses spatial descriptions into symbols, significantly improves LLM performance on spatial tasks compared with natural-language CoT prompting, and it needs fewer tokens to do so, making it faster and cheaper to use.

Despite these results, the authors note that they tested CoS on only two models because of limited time and resources, so further research is needed to confirm whether it works equally well on other models.

Paper Infographic