Chain-of-Verification Prompting

Prompt engineering series: techniques for eliciting the reasoning capabilities of LLMs

Original Paper: Chain-of-Verification Reduces Hallucination in Large Language Models

Abstract

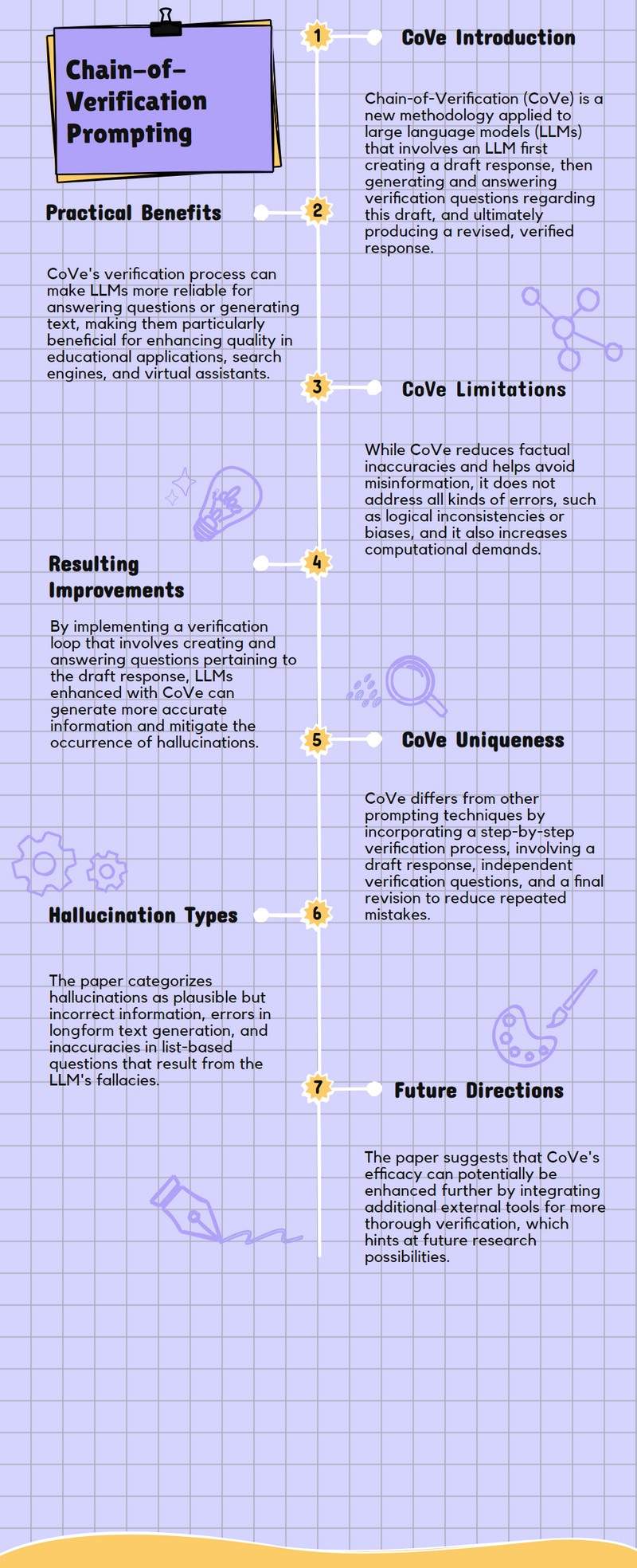

This paper introduces Chain-of-Verification (CoVe), a method that helps LLMs avoid mistakes by checking their own work with verification questions and answers before giving a final answer.

By doing this, the paper shows that LLMs become more accurate and hallucinate less across a variety of tasks, including list-based questions from Wikidata, closed-book MultiSpanQA, and long-form text generation.

Practical Implications

By using the Chain-of-Verification (CoVe) method, LLMs can reduce mistakes by asking themselves questions to double-check the information before finalizing an answer, making them more reliable for tasks like answering questions or generating text.

This approach can improve the quality of answers in general question-answering tasks and long-form text generation by making the information provided more accurate, which is especially useful in educational tools, search engines, and virtual assistants.

Although CoVe helps in reducing errors, it doesn't completely eliminate them, which means users should still critically evaluate the information provided by these LLMs, especially in high-stakes scenarios like medical advice or legal information.

The method also increases the computational cost due to the need for generating additional verification steps, which might limit its use in devices with lower processing power.

Methodology

The paper introduces a method called Chain-of-Verification (CoVe), in which a large language model (1) drafts a baseline response, (2) plans verification questions to check that draft, (3) answers those questions, and (4) produces a final, verified response that corrects any errors the verification revealed.
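
The draft, plan, verify, and revise steps can be sketched in Python as below. This is a minimal illustration, not the paper's implementation: `chain_of_verification`, `scripted_llm`, `recording_llm`, and all prompt wording are hypothetical. The model is assumed to be any function from prompt text to completion text, and `scripted_llm` returns canned replies so the sketch runs offline. Step 3 answers each question without showing the draft, as in the factored variant.

```python
from typing import Callable, List

# Hypothetical interface: any function that maps a prompt string to a completion string.
LLM = Callable[[str], str]


def chain_of_verification(query: str, llm: LLM) -> str:
    """Minimal CoVe sketch: draft, plan checks, answer checks, revise."""
    # 1. Draft a baseline response.
    draft = llm(f"Answer the question.\nQuestion: {query}\nAnswer:")
    # 2. Plan verification questions conditioned on the query and the draft.
    plan = llm(
        "Write one verification question per line that checks the facts in this answer.\n"
        f"Question: {query}\nAnswer: {draft}\nVerification questions:"
    )
    questions = [line.strip() for line in plan.splitlines() if line.strip()]
    # 3. Answer each verification question in its own prompt, without the draft.
    answers = [llm(f"Question: {q}\nAnswer:") for q in questions]
    # 4. Generate the final response from the draft plus the verification Q&A.
    evidence = "\n".join(f"Q: {q}\nA: {a}" for q, a in zip(questions, answers))
    return llm(
        "Revise the draft so that it agrees with the verification answers.\n"
        f"Question: {query}\nDraft: {draft}\nVerification:\n{evidence}\nFinal answer:"
    )


# Scripted stand-in for a real model so the sketch runs offline; replies are canned.
def scripted_llm(prompt: str) -> str:
    if prompt.startswith("Answer the question."):
        return "Hillary Clinton, Donald Trump, Michael Bloomberg"
    if prompt.startswith("Write one verification question"):
        return (
            "Where was Hillary Clinton born?\n"
            "Where was Donald Trump born?\n"
            "Where was Michael Bloomberg born?"
        )
    if prompt.startswith("Revise the draft"):
        return "Donald Trump"
    birthplaces = {
        "Hillary Clinton": "Chicago, Illinois",
        "Donald Trump": "Queens, New York City",
        "Michael Bloomberg": "Boston, Massachusetts",
    }
    for name, place in birthplaces.items():
        if name in prompt:
            return place
    return "I don't know."


prompts: List[str] = []


def recording_llm(prompt: str) -> str:
    prompts.append(prompt)  # keep every prompt so we can count model calls
    return scripted_llm(prompt)


final = chain_of_verification("Name some politicians born in New York City.", recording_llm)
print(final)         # Donald Trump
print(len(prompts))  # 6 = 1 draft + 1 plan + 3 verification answers + 1 final
```

To try it with a real model, replace `scripted_llm` with a function that sends the prompt to that model; the paper's prompts also include few-shot examples, which this sketch omits.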

CoVe uses few-shot prompting for verification planning: the model is shown examples of responses paired with verification questions that check them, so it can plan similar checks for a new response, which decreases the chance of mistakes in the final answer.
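
A minimal sketch of how such a few-shot planning prompt might be assembled. The `Query:`/`Draft:`/`Verification questions:` layout, the `PlanExample` type, the `build_planning_prompt` helper, and the demonstration itself are hypothetical, not the paper's actual prompt.

```python
from typing import List, NamedTuple, Optional


class PlanExample(NamedTuple):
    """A hypothetical few-shot demonstration: a query, a draft answer, and its checks."""
    query: str
    draft: str
    questions: List[str]


def format_case(query: str, draft: str, questions: Optional[List[str]] = None) -> str:
    lines = [f"Query: {query}", f"Draft: {draft}", "Verification questions:"]
    if questions is not None:
        lines += [f"- {q}" for q in questions]
    return "\n".join(lines)


def build_planning_prompt(examples: List[PlanExample], query: str, draft: str) -> str:
    # Demonstrations come first; the new case ends with an open question list
    # for the model to complete in the same style.
    cases = [format_case(ex.query, ex.draft, ex.questions) for ex in examples]
    cases.append(format_case(query, draft))
    return "\n\n".join(cases)


demo = PlanExample(
    query="Who wrote the novel Frankenstein, and when was it first published?",
    draft="Frankenstein was written by Mary Shelley and first published in 1818.",
    questions=[
        "Who is the author of Frankenstein?",
        "In what year was Frankenstein first published?",
    ],
)
prompt = build_planning_prompt(
    [demo],
    query="Name some politicians born in New York City.",
    draft="Hillary Clinton, Donald Trump, Michael Bloomberg",
)
print(prompt)
```

The demonstration shows the model one fact-per-question style of checking; more demonstrations could be appended to the list in the same format.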

The paper also explores a "factored" version of CoVe, in which the model answers each verification question independently, without seeing its original draft response, so that it does not simply repeat the draft's mistakes.
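
The factored execution step might look like the sketch below, in which each verification question gets its own prompt containing only that question. `answer_factored` and `recording_llm` are hypothetical names; the recording stub stands in for a real model so we can inspect exactly what the verifier was shown.

```python
from typing import Callable, Dict, List


def answer_factored(questions: List[str], llm: Callable[[str], str]) -> Dict[str, str]:
    """Answer each verification question in a separate prompt.

    The prompt contains only the question: no baseline draft and no other
    questions, so a hallucination in the draft cannot be copied into the answer.
    """
    return {q: llm(f"Answer concisely.\nQuestion: {q}\nAnswer:") for q in questions}


seen: List[str] = []


def recording_llm(prompt: str) -> str:
    # Stand-in for a real model: records the prompt and returns a placeholder.
    seen.append(prompt)
    return "placeholder answer"


draft = "Hillary Clinton, Donald Trump, Michael Bloomberg"
questions = ["Where was Hillary Clinton born?", "Where was Michael Bloomberg born?"]
answers = answer_factored(questions, recording_llm)

print(len(seen))                      # 2: one call per question
print(any(draft in p for p in seen))  # False: the draft never reaches the verifier
```

Because each call sees only one question, the answers also cannot influence one another; the trade-off is one model call per question.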

Additionally, the research mentions the possibility of enhancing CoVe by incorporating external tools for further verification, suggesting a future direction for improving the method's effectiveness.
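
One way such a tool could slot in is sketched below, assuming a hypothetical `Tool` that returns evidence text for a verification question, or `None` when it has nothing. The dictionary-backed `lookup_tool` stands in for a real search or retrieval system, and `echo_llm` returns its prompt so the difference between grounded and closed-book verification is visible. This illustrates the suggested direction only; it is not a method evaluated in the paper.

```python
from typing import Callable, Dict, Optional

# Hypothetical tool interface: returns evidence text for a question, or None.
Tool = Callable[[str], Optional[str]]


def answer_with_tool(question: str, tool: Tool, llm: Callable[[str], str]) -> str:
    evidence = tool(question)
    if evidence is None:
        # No external evidence: fall back to the model's own closed-book answer.
        return llm(f"Question: {question}\nAnswer:")
    # Ground the verification answer in the retrieved evidence.
    return llm(
        f"Evidence: {evidence}\nQuestion: {question}\n"
        "Answer using only the evidence:"
    )


# A toy lookup table standing in for a search engine or retrieval system.
KNOWLEDGE: Dict[str, str] = {
    "Where was Michael Bloomberg born?": "Michael Bloomberg was born in Boston, Massachusetts.",
}


def lookup_tool(question: str) -> Optional[str]:
    return KNOWLEDGE.get(question)


def echo_llm(prompt: str) -> str:
    # Stand-in model that returns its prompt, so we can see what it was given.
    return prompt


grounded = answer_with_tool("Where was Michael Bloomberg born?", lookup_tool, echo_llm)
closed_book = answer_with_tool("Where was Hillary Clinton born?", lookup_tool, echo_llm)
print(grounded.startswith("Evidence:"))     # True
print(closed_book.startswith("Evidence:"))  # False
```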

Limitations

The Chain-of-Verification (CoVe) method does not completely eliminate incorrect or misleading information, meaning it can still make mistakes even though it improves accuracy over previous methods.

CoVe focuses on reducing factual inaccuracies but does not address other types of errors, such as incorrect reasoning or biases in opinions, which can also lead to misinformation.

Implementing CoVe increases computational costs because it generates more text for verification, which could limit its use in environments with restricted computing resources.
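
To make the cost concrete, the sketch below counts model calls per query for a given number of verification questions. The accounting is an assumption for illustration (each step is one separate prompt, and the factor+revise variant adds one cross-check prompt per question), not figures reported in the paper; `llm_calls` is a hypothetical helper.

```python
def llm_calls(num_questions: int, variant: str) -> int:
    """Count model calls per query, assuming each CoVe step is one separate prompt.

    Hypothetical accounting for illustration, not figures reported in the paper.
    """
    if num_questions < 0:
        raise ValueError("num_questions must be non-negative")
    if variant == "baseline":
        return 1  # a single draft, no verification
    if variant == "factored":
        return 1 + 1 + num_questions + 1  # draft + plan + one per question + final
    if variant == "factor+revise":
        # factored, plus one cross-check prompt per question
        return 1 + 1 + 2 * num_questions + 1
    raise ValueError(f"unknown variant: {variant}")


for variant in ("baseline", "factored", "factor+revise"):
    print(variant, llm_calls(5, variant))
# baseline 1
# factored 8
# factor+revise 13
```

Under these assumptions the cost grows linearly with the number of verification questions, which is why long responses with many facts to check are the most expensive to verify.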

The effectiveness of CoVe is inherently limited by the base language model's capabilities, suggesting that without further advancements in language models, there's a ceiling to how much CoVe can improve response accuracy.

Conclusion

The Chain-of-Verification (CoVe) method successfully reduces mistakes in large language models by making them check their work through a series of steps, leading to more accurate information being generated.

CoVe's approach of asking and answering verification questions significantly lowers the occurrence of incorrect facts, known as hallucinations, in the model's responses.

Despite its effectiveness, CoVe does not completely eliminate errors and still has limitations, such as not addressing all types of inaccuracies and increasing computational costs.

The paper suggests that further improvements could be made by combining CoVe with external tools for verification, indicating a direction for future research to enhance the accuracy of language models.

How is Chain-of-Verification prompting different from other prompting techniques?

CoVe breaks the verification process into steps: the model first drafts a response, then creates and answers its own verification questions to check for mistakes, and only then finalizes the response. Traditional prompting, by contrast, typically generates a response in a single step from the input prompt.

Unlike other methods, CoVe can use a "factored" approach in which the answers to the verification questions are deliberately not conditioned on the initial response, aiming to reduce the repetition of errors and improve the accuracy of the final output.

CoVe also introduces an explicit cross-checking step in its "factor+revise" variant: after answering the verification questions, the model checks the answers against the draft for inconsistencies. Other prompting techniques rarely include such a step and usually rely on the model's initial output without further verification.
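
A minimal sketch of the cross-check (called factor+revise in the paper) for a list-style answer, assuming each draft claim has already been paired with a verification question and its factored answer. `cross_check`, `scripted_judge`, and the CONSISTENT/INCONSISTENT reply format are hypothetical; `scripted_judge` is a rule-based stand-in for the model's judgment. Claims judged inconsistent are dropped before the final response is written.

```python
from typing import Callable, Dict, List, Tuple


def cross_check(
    claims: List[str],
    checks: Dict[str, Tuple[str, str]],
    llm: Callable[[str], str],
) -> List[str]:
    """Keep only the draft claims whose verification answer the model judges consistent."""
    kept = []
    for claim in claims:
        question, answer = checks[claim]
        # Unlike factored answering, this prompt sees the draft claim and the Q&A together.
        verdict = llm(
            f"Original claim: {claim}\n"
            f"Verification question: {question}\n"
            f"Verification answer: {answer}\n"
            "Is the original claim consistent with the verification answer? "
            "Reply CONSISTENT or INCONSISTENT."
        )
        if verdict.strip().upper() == "CONSISTENT":
            kept.append(claim)
    return kept


def scripted_judge(prompt: str) -> str:
    # Stand-in for a real model: a birthplace answer mentioning New York counts as consistent.
    answer_line = next(
        line for line in prompt.splitlines() if line.startswith("Verification answer:")
    )
    return "CONSISTENT" if "New York" in answer_line else "INCONSISTENT"


checks = {
    "Hillary Clinton was born in New York City.": (
        "Where was Hillary Clinton born?", "Chicago, Illinois"),
    "Donald Trump was born in New York City.": (
        "Where was Donald Trump born?", "Queens, New York City"),
    "Michael Bloomberg was born in New York City.": (
        "Where was Michael Bloomberg born?", "Boston, Massachusetts"),
}
print(cross_check(list(checks), checks, scripted_judge))
# ['Donald Trump was born in New York City.']
```

The verdict is compared for equality rather than with `startswith`, so an "INCONSISTENT" reply is never mistaken for "CONSISTENT".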

What are the different types of hallucination mentioned in this paper?

Plausible but Incorrect Information: This type of hallucination involves the generation of information that seems believable but is actually false. It's a common problem in large language models where the generated content sounds right but doesn't match real facts.

Longform Generation Errors: When language models try to create longer pieces of text, they often make more mistakes. These errors can include making up facts or details that aren't true, which is a specific challenge when the model tries to generate complex or extended content.

List-based Question Mistakes: In tasks where the model has to generate a list of items, such as names or entities, based on a query, it can sometimes include items that don't belong or omit correct ones, leading to inaccuracies in the generated lists.
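
List answers like these are naturally scored by precision: the share of generated items that are correct. The sketch below computes it along with the hallucinated items; `list_precision` is a hypothetical helper, and the gold set is a small illustrative subset, not a complete answer key.

```python
from typing import List, Set, Tuple


def list_precision(predicted: List[str], gold: Set[str]) -> Tuple[float, List[str]]:
    """Return precision of a generated list and the items not found in the gold set.

    Precision penalizes hallucinated items but not omissions; an empty prediction
    list gets 0.0 here by convention (precision is undefined in that case).
    """
    if not predicted:
        return 0.0, []
    hallucinated = [item for item in predicted if item not in gold]
    precision = (len(predicted) - len(hallucinated)) / len(predicted)
    return precision, hallucinated


# A small illustrative gold set, not a complete answer key.
gold = {"Donald Trump", "Theodore Roosevelt", "Alexandria Ocasio-Cortez"}
predicted = ["Hillary Clinton", "Donald Trump", "Michael Bloomberg"]
precision, hallucinated = list_precision(predicted, gold)
print(round(precision, 3))  # 0.333
print(hallucinated)         # ['Hillary Clinton', 'Michael Bloomberg']
```

Precision does not penalize omitted items, so the second failure mode described for list-based questions would need a recall-style measure against a complete answer key.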

Paper Infographic