DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning

Series on reasoning-based LLMs

This is a non-technical explanation of the original paper, "DeepSeek-R1: Incentivizing Reasoning Capability in LLMs via Reinforcement Learning".

Abstract:

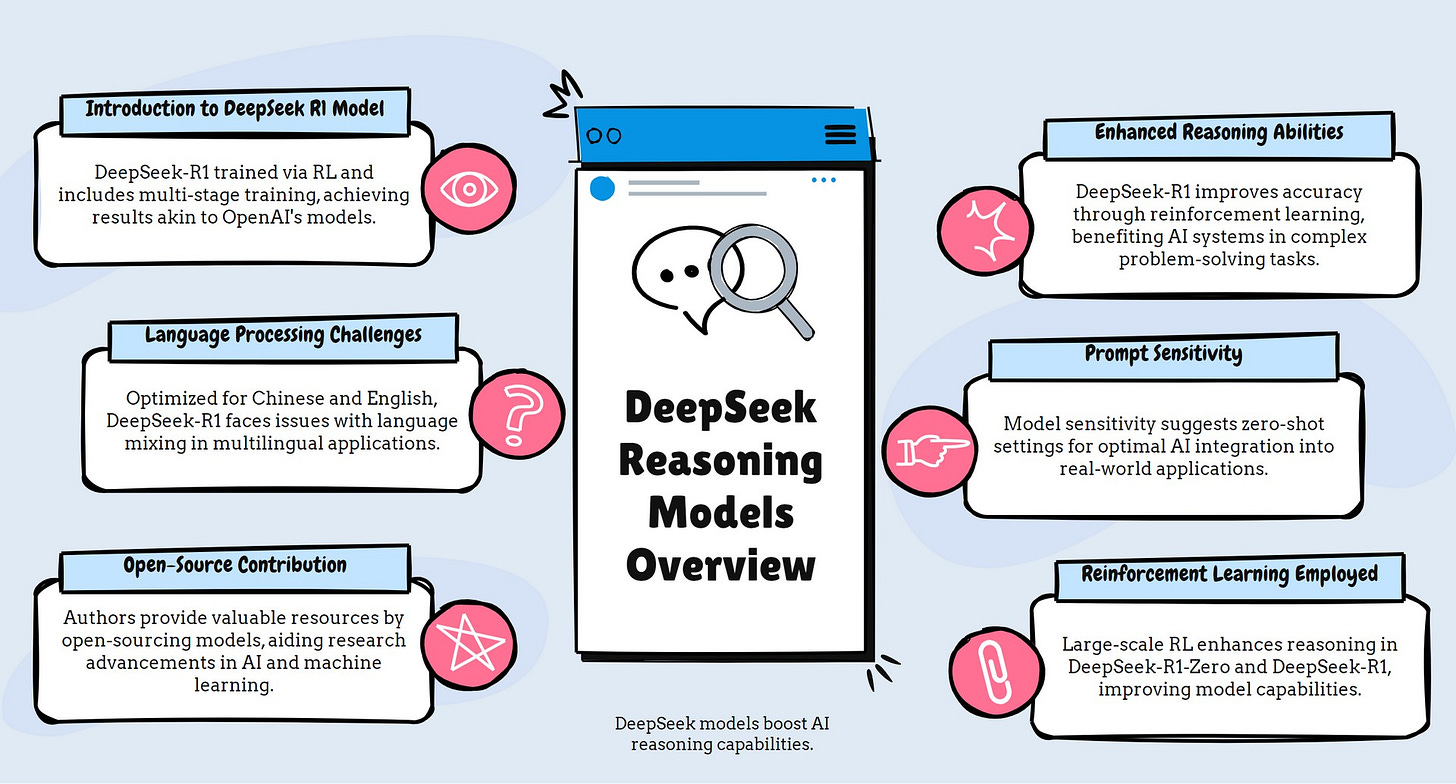

The paper introduces two reasoning models: DeepSeek-R1-Zero and DeepSeek-R1. DeepSeek-R1-Zero is trained with large-scale reinforcement learning (RL) without supervised fine-tuning (SFT) as a preliminary step, and it shows strong reasoning capabilities.

Despite its strengths, DeepSeek-R1-Zero faces challenges like poor readability and language mixing. To address these, DeepSeek-R1 incorporates multi-stage training and cold-start data before RL, achieving performance comparable to OpenAI-o1-1217 on reasoning tasks.

The authors open-source both models and six distilled dense models (1.5B, 7B, 8B, 14B, 32B, and 70B parameters) based on Qwen and Llama.

Practical Implications:

Enhanced Reasoning Capabilities: The paper introduces DeepSeek-R1, which improves reasoning abilities in language models through reinforcement learning, potentially leading to more accurate and reliable AI systems for complex tasks.

Language Processing: DeepSeek-R1 is optimized for Chinese and English, but it faces challenges with language mixing when handling other languages. This highlights the need for further development to support multilingual applications effectively.

Prompt Sensitivity: The model is sensitive to prompts, and few-shot prompting degrades its performance. Users should describe the problem directly and specify the output format in a zero-shot setting, which affects how the model is integrated into real-world applications.

Open-Source Contribution: By open-sourcing their models, the authors provide valuable resources for the research community, facilitating further advancements in AI and machine learning.

Methodology:

Reinforcement Learning (RL): The paper employs large-scale RL to enhance the reasoning capabilities of the models, particularly in DeepSeek-R1-Zero and DeepSeek-R1.

Cold Start Data Collection: To stabilize the initial RL training phase, the authors collect a small amount of long Chain-of-Thought (CoT) data to fine-tune the model as the initial RL actor.

Supervised Fine-Tuning (SFT): As RL nears convergence, SFT is used to incorporate data from various domains, such as writing and factual QA, enhancing the model's general-purpose capabilities.

Prompting Techniques: Few-shot and zero-shot prompting are explored, with a recommendation for zero-shot settings to avoid performance degradation.

Rejection Sampling: The authors use rejection sampling on the RL checkpoint to create new SFT data. They also plan to apply it to software engineering data in future versions, to work around the long evaluation times that make RL inefficient on those tasks.
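To make the idea of rejection sampling concrete, here is a minimal sketch, not the authors' implementation. It draws several candidate responses for a prompt and keeps only those that pass a check. The function names, the toy "model", and the rule-based correctness check are all hypothetical stand-ins; a real pipeline would call an actual model and a task-specific verifier.

```python
import random
from typing import Callable, List


def rejection_sample(
    prompt: str,
    generate: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
    num_candidates: int = 8,
) -> List[str]:
    """Draw several candidate responses and keep only those that pass the check."""
    candidates = [generate(prompt) for _ in range(num_candidates)]
    return [c for c in candidates if is_acceptable(c)]


# Hypothetical stand-in for a model: it "reasons" about 2 + 3 and
# sometimes lands on a wrong answer.
_rng = random.Random(0)


def toy_generate(prompt: str) -> str:
    guess = _rng.choice([4, 5, 6])
    return f"<think>2 + 3 = {guess}</think> Answer: {guess}"


# Hypothetical rule-based check: accept only the correct final answer.
def toy_is_correct(response: str) -> bool:
    return response.strip().endswith("Answer: 5")


# Only the responses with the correct final answer survive; these are the
# ones that would be kept as training data.
kept = rejection_sample("What is 2 + 3?", toy_generate, toy_is_correct)
for response in kept:
    print(response)
```

The design choice worth noting is that filtering happens after generation: the model is free to produce wrong answers, and quality is enforced by discarding them rather than by constraining the sampling itself.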

Limitations:

General Capability: DeepSeek-R1 currently falls short of DeepSeek-V3 in tasks like function calling, multi-turn interactions, complex role-playing, and JSON output.

Language Mixing: The model is optimized for Chinese and English, which can lead to language mixing when handling queries in other languages. For example, it may reason and respond in English even when the query is in a different language.

Prompt Sensitivity: DeepSeek-R1 is sensitive to prompts, with few-shot prompting degrading performance. A zero-shot setting is recommended for optimal results.

Software Engineering Tasks: Because long evaluation times slow down the RL process, large-scale RL has not been applied extensively to software engineering tasks. As a result, the model has not shown significant improvement over DeepSeek-V3 on software engineering benchmarks.

Readability Issues: DeepSeek-R1-Zero struggles with readability and language mixing, which DeepSeek-R1 addresses through the use of cold-start data.
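The zero-shot recommendation under Prompt Sensitivity above can be illustrated with a small sketch. The two helper functions below are hypothetical and only show the difference in prompt shape: the zero-shot version states the problem and the desired output format directly, while the few-shot version prepends worked examples, which is the style reported to degrade performance.

```python
from typing import List, Tuple


def build_zero_shot_prompt(problem: str, output_format: str) -> str:
    """State the problem and the desired output format, with no worked examples."""
    return f"{problem}\n\n{output_format}"


def build_few_shot_prompt(problem: str, examples: List[Tuple[str, str]]) -> str:
    """Prepend solved question/answer pairs before the actual problem."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {problem}\nA:"


# Recommended style: describe the task directly and specify the format.
zero_shot = build_zero_shot_prompt(
    "Solve x + 2 = 5.",
    "Give the final answer as a single number.",
)

# Style to avoid with this model, shown only for contrast.
few_shot = build_few_shot_prompt("Solve x + 2 = 5.", [("1 + 1?", "2")])

print(zero_shot)
print("---")
print(few_shot)
```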

Conclusion:

Reinforcement Learning Success: The paper highlights the success of using reinforcement learning (RL) to enhance model reasoning abilities, with DeepSeek-R1-Zero achieving strong performance without cold-start data.

Performance Comparison: DeepSeek-R1 achieves performance comparable to OpenAI-o1-1217 across various tasks, demonstrating its effectiveness.

Distillation to Smaller Models: The reasoning capabilities of DeepSeek-R1 were successfully distilled into smaller models, with DeepSeek-R1-Distill-Qwen-1.5B outperforming GPT-4o and Claude-3.5-Sonnet on math benchmarks.

Future Directions: The paper outlines plans to improve general capabilities, address language mixing issues, refine prompt engineering, and enhance performance in software engineering tasks.

Paper Infographic