Self-Refine Prompting

Prompt engineering series on techniques eliciting reasoning capabilities of LLMs

Original Paper: SELF-REFINE: Iterative Refinement with Self-Feedback

Abstract

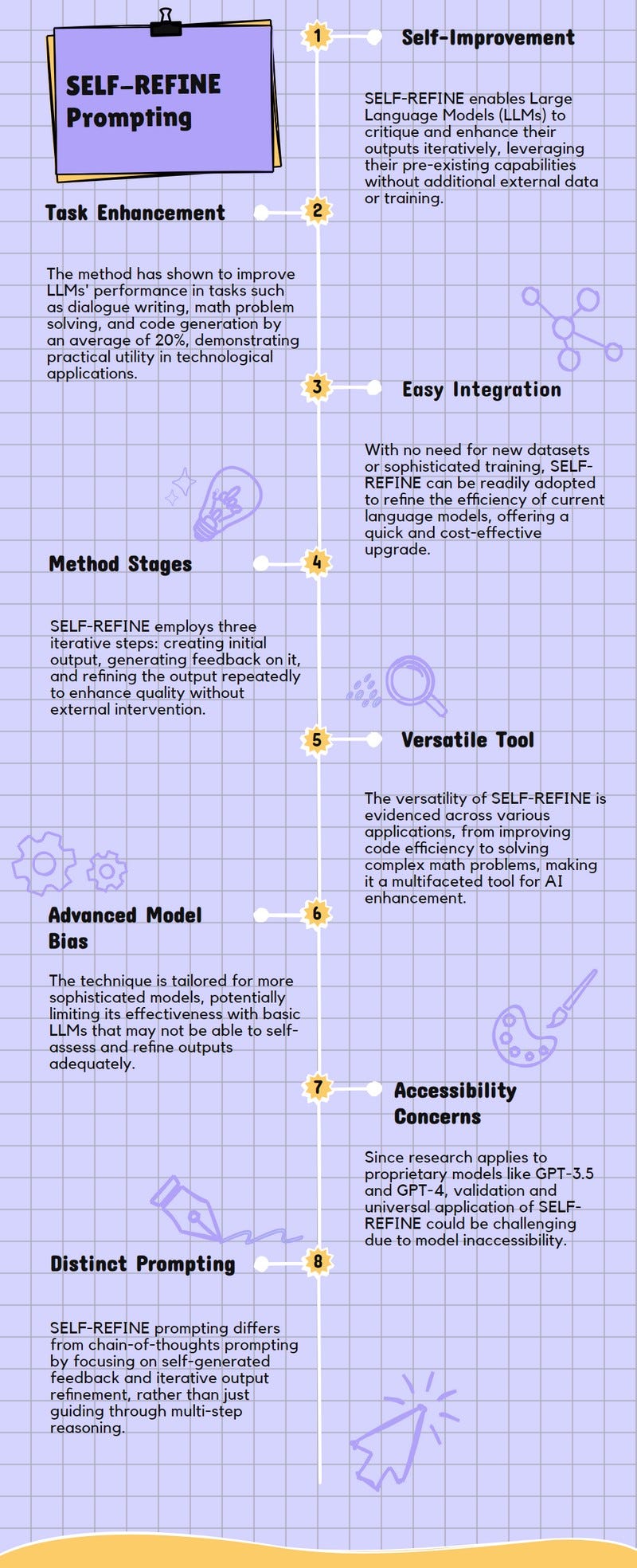

This paper introduces SELF-REFINE, a method where an LLM improves its own work by giving itself advice and making changes based on that advice, without needing extra help or learning from new examples.

By applying SELF-REFINE to different tasks, like writing dialogue or solving math problems, the LLM gets better at these tasks by about 20% on average, showing that even the most advanced LLMs can still improve with this simple technique.

Practical Implications

This paper's approach, SELF-REFINE, allows LLMs to improve their own work without needing new examples or extra training, making it easier and cheaper to get better results from existing technology.

By applying SELF-REFINE, tasks like writing dialogue, solving math problems, or generating code can see significant improvements, which means these programs can be more helpful in real-world applications, like creating better chatbots or more efficient software.

Since SELF-REFINE doesn't require new data or complex training methods, researchers and developers can use this technique to enhance the performance of language models quickly and with less effort, potentially speeding up the development of new applications and technologies.

The simplicity and effectiveness of SELF-REFINE in improving the output of language models suggest that even advanced technologies can benefit from straightforward enhancements, encouraging further research and innovation in the field.

Methodology

SELF-REFINE starts by using a large language model to create an initial output, then it gives itself feedback on that output and uses this feedback to make the output better, doing this over and over again until it's good enough.

The process includes three main steps: generating feedback on the initial output, refining the output based on this feedback, and repeating these steps iteratively to gradually improve the quality of the output.

This method does not require new data for training or complex reinforcement learning techniques; it relies solely on the language model's ability to generate, critique, and refine its outputs using the feedback it provides to itself.

SELF-REFINE's effectiveness is tested across various tasks, showing that it can significantly enhance the performance of language models without additional training or data, making it a practical tool for improving AI-generated content.

Limitations

The method relies heavily on the language model's ability to understand and follow instructions, which means it might not work as well with simpler models that can't give or use feedback properly.

Since the research was done using models that aren't shared publicly, like GPT-3.5 and GPT-4, it's hard for other people to check the work or try it out without spending money.

The paper only looks at tasks in English, so it's not clear if SELF-REFINE would help with tasks in other languages or stop bad uses, like making harmful content.

In some areas, like making computer code better or changing the feeling of a text, the method needs very specific feedback to work well, which might not always be possible.

Conclusion

SELF-REFINE is a new way to make language models better by letting them check and improve their own work without needing new data or complex training methods. This approach can make models like GPT-4 even better at tasks they already do well.

The method shows promise across a variety of tasks, including making computer code more efficient and understanding math problems better, proving that it's a versatile tool for enhancing AI outputs.

However, SELF-REFINE's success depends on the model's ability to give useful feedback and make good changes based on that feedback. It works best with advanced models and might not be as effective with simpler ones.

Despite its benefits, the approach has limitations, such as relying on models that are not freely available, which could make it hard for everyone to use or test this method.

How SELF-REFINE Prompting is different from Chain-of-Thoughts Prompting?

SELF-REFINE prompting involves a model generating an initial output, then providing feedback on its own output, and using this feedback to iteratively refine the output until a desired quality is achieved. This process is self-contained, using the same model for generation, feedback, and refinement without external inputs.

Chain-of-thoughts prompting, on the other hand, guides a model to solve complex problems by breaking them down into simpler, intermediate steps or thoughts. It focuses on making the reasoning process explicit, which helps in tackling tasks that require multi-step reasoning but does not inherently include a mechanism for self-feedback or iterative refinement based on such feedback.

The key difference lies in SELF-REFINE's unique approach of self-assessment and iterative improvement, contrasting with the chain-of-thoughts method, which primarily aims to enhance problem-solving by structuring the thought process rather than refining the output through feedback loops.

Paper Infographic