Tree of Thoughts Prompting

Prompt engineering series on techniques eliciting reasoning capabilities of LLMs

Original Paper: Tree of Thoughts: Deliberate Problem Solving with Large Language Models

Abstract

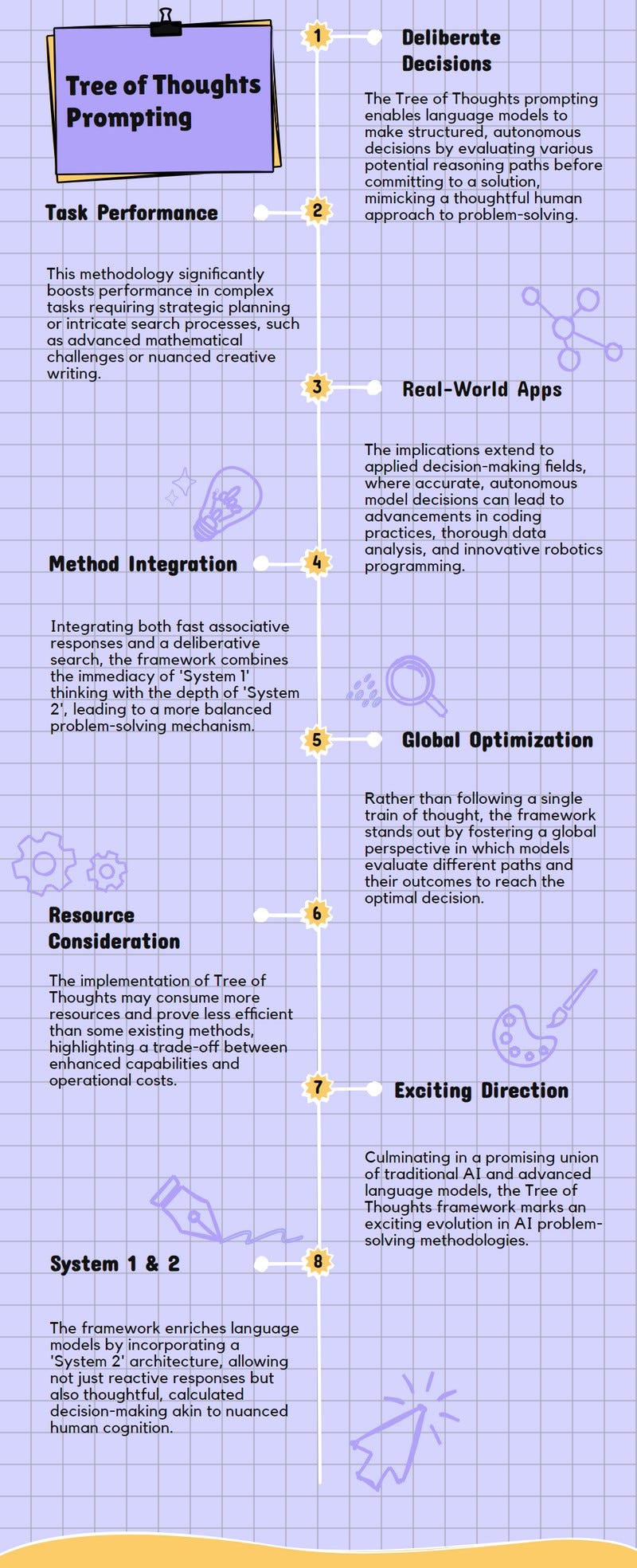

The Tree of Thoughts framework enhances language models' problem-solving abilities by enabling deliberate decision-making through exploration over coherent units of text.

It allows language models to consider multiple reasoning paths, self-evaluate choices, and make global decisions, significantly improving performance on tasks requiring planning or search.

Practical Implications

The Tree of Thoughts framework enhances language models' problem-solving abilities on tasks requiring planning or search, such as Game of 24 and Creative Writing.

It empowers language models to make more autonomous and intelligent decisions, improving interpretability and human alignment in model decisions.

The framework could benefit real-world decision-making applications such as coding, data analysis, and robotics, opening up opportunities for further research in these areas.

Methodology

The Tree of Thoughts framework enables language models to perform deliberate decision-making by considering multiple reasoning paths and self-evaluating choices.

The framework allows exploration over coherent units of text, serving as intermediate steps toward problem-solving.

The approach can be considered a modern rendition of classical search methods for problem-solving, incorporating heuristics and value feedback.

The Tree of Thoughts framework augments the associative 'System 1' of language models with a 'System 2' based on searching a tree of possible paths to the solution.
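To make the search idea concrete, here is a minimal breadth-first sketch on a Game of 24 style puzzle. It is an illustration under stated assumptions, not the paper's implementation: the function names (`propose`, `evaluate`, `tree_of_thoughts_bfs`) are hypothetical, and both the thought generator and the evaluator are plain Python stand-ins for what would be language model prompts in the real framework. The evaluator in particular is a brute-force reachability check, far stronger than a real model's self-evaluation, chosen only so that the example runs deterministically without any model calls.

```python
from fractions import Fraction
from itertools import combinations

# A state is (remaining_numbers, steps_so_far); each step is one "thought".

def propose(state):
    """Stand-in for the LLM thought generator: combine any two numbers."""
    nums, steps = state
    children = []
    for i, j in combinations(range(len(nums)), 2):
        a, b = nums[i], nums[j]
        rest = [n for k, n in enumerate(nums) if k not in (i, j)]
        options = [(a + b, f"{a} + {b}"), (a * b, f"{a} * {b}"),
                   (a - b, f"{a} - {b}"), (b - a, f"{b} - {a}")]
        if b != 0:
            options.append((a / b, f"{a} / {b}"))
        if a != 0:
            options.append((b / a, f"{b} / {a}"))
        for value, text in options:
            children.append((tuple(rest + [value]),
                             steps + (f"{text} = {value}",)))
    return children

def reachable(nums, target):
    """True if the remaining numbers can still be combined into the target."""
    if len(nums) == 1:
        return nums[0] == target
    return any(reachable(child, target) for child, _ in propose((nums, ())))

def evaluate(state, target):
    """Stand-in for LLM self-evaluation (assumption: an exact oracle)."""
    return 1.0 if reachable(state[0], target) else 0.0

def tree_of_thoughts_bfs(numbers, target=24, breadth=5):
    """Expand every frontier state, score the children, keep the best few."""
    target = Fraction(target)
    frontier = [(tuple(Fraction(n) for n in numbers), ())]
    while frontier and len(frontier[0][0]) > 1:
        candidates = [c for state in frontier for c in propose(state)]
        # Global decision: rank all candidate thoughts across the whole level.
        candidates.sort(key=lambda s: evaluate(s, target), reverse=True)
        frontier = candidates[:breadth]
    for nums, steps in frontier:
        if len(nums) == 1 and nums[0] == target:
            return steps
    return None
```

Calling `tree_of_thoughts_bfs([4, 9, 10, 13])` returns a tuple of three intermediate steps whose last result is 24, while an unsolvable input such as `[1, 1, 1, 1]` returns `None`. The `breadth` parameter is the number of partial solutions kept per level; with a noisier evaluator, a wider beam trades extra calls for a lower risk of pruning the correct path.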

Limitations

The Tree of Thoughts framework may not be necessary for tasks where existing language models like GPT-4 already excel.

The study only explores relatively simple tasks, leaving room for further investigation into more complex applications.

Implementing the Tree of Thoughts method may require more resources compared to sampling methods, potentially impacting cost and efficiency.

Conclusion

The Tree of Thoughts framework enhances language models' problem-solving abilities on tasks requiring planning or search.

The framework empowers language models to make decisions autonomously and intelligently.

The authors regard the intersection of language models with classical AI approaches as an exciting direction for future work.

How is the Tree of Thoughts framework different from chain-of-thought prompting?

Tree of Thoughts allows for exploration over coherent units of text, serving as intermediate steps towards problem-solving.

Chain-of-Thought prompting involves a chain of coherent language sequences bridging input and output, sampled sequentially.

Tree of Thoughts enables deliberate decision-making by considering multiple reasoning paths and self-evaluating choices, allowing for global choices.

Chain-of-Thought prompting samples thoughts coherently without explicit decomposition, leaving the decomposition of thoughts ambiguous.

How is the Tree of Thoughts framework different from self-consistency prompting?

Tree of Thoughts allows language models to explore multiple reasoning paths over thoughts in a tree structure.

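Self-consistency prompting samples several independent chains of thought and returns the most frequent final answer. It explores a richer set of thoughts than a single chain, but it does not explore or evaluate alternatives at the individual thought steps within a chain.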
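For contrast, the self-consistency side of the comparison can be sketched in a few lines. This is a hedged illustration: `self_consistency` is a hypothetical helper, and the sampled chains are passed in as plain `(reasoning, answer)` pairs rather than drawn from a model. The vote looks only at final answers, so nothing is evaluated or pruned at intermediate steps, which is the gap a tree search addresses.

```python
from collections import Counter

def self_consistency(chains):
    """Majority vote over the final answers of independently sampled chains.

    `chains` is a list of (reasoning_text, final_answer) pairs; the reasoning
    text is ignored, which is exactly the limitation being illustrated.
    """
    answers = [answer for _reasoning, answer in chains]
    winner, votes = Counter(answers).most_common(1)[0]
    return winner, votes / len(answers)

# Hypothetical sampled chains, for illustration only.
sampled = [
    ("13 - 9 = 4, 10 - 4 = 6, 6 * 4 = 24", "24"),
    ("4 + 9 = 13, 13 + 10 = 23, 23 - 13 = 10", "10"),
    ("10 - 4 = 6, 13 - 9 = 4, 6 * 4 = 24", "24"),
]
answer, agreement = self_consistency(sampled)
```

Here the vote settles on `"24"` because two of the three chains agree. Majority voting of this kind only helps when answers can be compared for equality, so it fits tasks with a small output space better than open-ended generation.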

Paper Infographic